LinkedIn, Apache Pig, and Open Source

Code Alert! This is part of our continuing series on Engineering at LinkedIn. If this isn’t your cup of Java, check back tomorrow for regular LinkedIn programming. In the meantime, check out some of our latest product features, tips and tricks, or user stories. - Ed.

One of the reasons I joined LinkedIn Analytics is its commitment to open source. At LinkedIn, we love open source. We’re committed to contributing to Hadoop and Pig and to giving back to the open source community through projects like Azkaban and Voldemort. We are determined to give the open source community the same complete, painless data cycle that we enjoy: to enable even casual Hadoop users to analyze data from their applications at scale, mine it for value, and store it easily and reliably so that it can drive usage and close the data loop. Look for new open source tools and projects from LinkedIn Analytics in the coming months that will help make this possible!

Hadoop drives many of our most powerful features at LinkedIn. About half of our Hadoop jobs are submitted by Apache Pig. This means that along with Azkaban and Voldemort, Pig is a large part of LinkedIn’s data cycle - the process behind features like People You May Know and Who Viewed My Profile.

LinkedIn and Apache Pig

I have used Pig intensively for about a year. During that time, I have come to love Pig for what it enables me to do: easily manipulate my data at scale and turn raw data into data products. If Perl is the duct tape of the internet, and Hadoop is the kernel of the data center as computer, then Pig is the duct tape of Big Data. Pig lets me easily flow my data in parallel with simple commands. It lets me flow my data through dynamic languages like Python when I want to use SciPy, and through simple Java UDFs when I want to reuse a function and share it with others. ILLUSTRATE lets me check the output of my lengthy batch jobs and their custom functions without a long run of the whole pipeline. Taken together, these features make me productive.

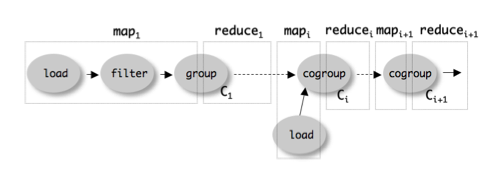

I learned Pig not because I had a big data problem, but because I wanted to build a better interface for Hadoop (see: PigPen, WireIT, this demo video, this code). For a long time, I did not delve very deeply. There was no reason to: I didn’t have to know how to code in MapReduce, because Pig ‘just worked.’ I issue SQL-like commands in Pig Latin, and Pig parses them, then creates and submits MapReduce jobs for me. This saves me from having to think too hard about the complexity of Java, MapReduce or Hadoop. I don’t like to think about anything but the problem I’m actually solving, so while I have written Algebraic Pig UDFs (which Pig runs across the map, combine, and reduce phases), I am unlikely to ever write a Java Hadoop job unless I absolutely have to.
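Pig’s Algebraic interface splits a UDF into Initial, Intermed, and Final functions so that Pig can do partial aggregation on the map side and in the combiner before the reducer finishes the job. The plain-Java sketch below illustrates that three-stage shape for an average, with no Pig dependencies. The class and method names are hypothetical; a real Pig UDF would instead extend Pig’s own classes and operate on tuples and bags.

```java
import java.util.List;

/**
 * Plain-Java sketch of the three-stage "algebraic" pattern behind Pig's
 * Algebraic UDFs. No Pig classes are used; all names here are hypothetical.
 */
public final class AlgebraicAverageSketch {

    /** Partial state passed between stages: a running sum and a count. */
    static final class Partial {
        final double sum;
        final long count;

        Partial(double sum, long count) {
            this.sum = sum;
            this.count = count;
        }
    }

    /** Initial stage (map side): summarize one chunk of raw values. */
    static Partial initial(List<Double> chunk) {
        double sum = 0;
        for (double v : chunk) {
            sum += v;
        }
        return new Partial(sum, chunk.size());
    }

    /** Intermediate stage (combiner): merge partials without the raw values. */
    static Partial intermediate(List<Partial> partials) {
        double sum = 0;
        long count = 0;
        for (Partial p : partials) {
            sum += p.sum;
            count += p.count;
        }
        return new Partial(sum, count);
    }

    /** Final stage (reducer): turn the merged state into the answer. */
    static double finalStage(Partial merged) {
        return merged.count == 0 ? Double.NaN : merged.sum / merged.count;
    }

    public static void main(String[] args) {
        // Two map-side chunks, summarized independently.
        Partial a = initial(List.of(1.0, 2.0, 3.0));
        Partial b = initial(List.of(4.0, 5.0));
        // A combiner merges the partials; the reducer finishes the job.
        double avg = finalStage(intermediate(List.of(a, b)));
        System.out.println(avg); // prints 3.0
    }
}
```

Because the intermediate stage only sees (sum, count) pairs, it can be applied any number of times to partial results, which is what makes it safe to push that work into a combiner.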

Apache Pig is now fairly robust, but data flows themselves can get complex fast. I’m pretty fluent in Pig Latin, but my code in any language rarely runs on the first try. With batch computing, running jobs repeatedly to debug them can take a long time and slow development to a crawl. One must often massage the Pig to bend it to one’s will.

When I write Pig Latin code beyond a dozen lines, I check it in stages:

- Write Pig Latin in TextMate (saved in a git repo; otherwise I lose code)

- Paste the code into the Grunt shell - Did it parse?

- DESCRIBE the final output and each complex step - Did it still parse? Is the schema what I expected?

- ILLUSTRATE the output - Does it still parse? Is the schema ok? Is the example data ok?

- SAMPLE / LIMIT / DUMP the output - Does it still parse? Is the schema ok? Is the sampled/limited data sane?

- STORE the final output and see if the job completes.

- cat output_dir/part-00000 (followed by a quick ctrl-c to stop the flood) - Is the stored output on HDFS ok?
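The SAMPLE / LIMIT / DUMP step keeps the check fast by shrinking the data before printing it. As a rough illustration, this plain-Java sketch mimics the two operators on an in-memory list: SAMPLE keeps each record independently with a given probability, so the sample size varies from run to run, and LIMIT keeps at most n records. The class and method names are hypothetical, and the sketch only models the operators’ semantics, not how Pig executes them on Hadoop.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Random;

/**
 * Plain-Java sketch of the semantics of Pig's SAMPLE and LIMIT operators,
 * using an in-memory list in place of a relation. Names are hypothetical.
 */
public final class SampleLimitSketch {

    /** Like SAMPLE: keep each record independently with probability `fraction`. */
    static <T> List<T> sample(List<T> records, double fraction, Random rng) {
        List<T> kept = new ArrayList<>();
        for (T record : records) {
            if (rng.nextDouble() < fraction) {
                kept.add(record);
            }
        }
        return kept;
    }

    /** Like LIMIT: keep at most n records (Pig does not promise which ones). */
    static <T> List<T> limit(List<T> records, int n) {
        int end = Math.max(0, Math.min(n, records.size()));
        return new ArrayList<>(records.subList(0, end));
    }

    public static void main(String[] args) {
        List<Integer> records = new ArrayList<>();
        for (int i = 0; i < 10_000; i++) {
            records.add(i);
        }
        // Roughly 1% of the records survive; the exact count depends on the seed.
        List<Integer> sampled = sample(records, 0.01, new Random(42));
        // Print a handful, as DUMP would, to eyeball whether the data looks sane.
        System.out.println(sampled.size() + " sampled; first few: " + limit(sampled, 5));
    }
}
```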

When you first tackle a complex task with Pig, that last step rarely succeeds on the first few tries. In time, you get more proficient.

As an incurious Pig user, I thought of Pig as a black box: a program with a command line. Nevertheless, I got to know the idiosyncrasies of each version as Pig matured from version 0.2 to 0.7 - unfixed bugs, unusual behaviors, and undocumented limitations. I never knew exactly why Pig behaved as it did, but I learned to get along with it.

Working on Pig

Several months ago I decided to contribute to the Pig project. I’m going after low-hanging fruit that the committers haven’t gotten around to, and leaving the tough bits to them. Log analysis is a common use of Pig, and logs usually contain timestamps, so I want to add a Joda-Time DateTime data type to Pig.

But that is way too hard, so I’m going after a boolean type first. I checked out the code. I worked on it all weekend. I made a patch. I made many patches, actually. Time and again, I thought I was done, but I wasn’t. Booleans would load in Grunt, so I thought it worked, but they wouldn’t store. I added physical storage code, so I could load and store. I emailed the LinkedIn Hadoop users list proclaiming victory… but it wouldn’t work on Hadoop. So I added Hadoop storage code, and booleans would load and store on Hadoop, but I couldn’t use operators to check for equality. I added code for ILLUSTRATE and it would illustrate, but I still couldn’t use booleans in a real job. This went on and on, and the patch remains a work in progress.
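Each of those stumbling blocks was a separate place where a new type has to be taught to the system. The hypothetical sketch below, in plain Java with no Pig classes, shows three such concerns for a boolean: parsing it from text on load, serializing it to bytes so it can be stored and read back, and comparing it for equality. The names and the presence-marker byte layout are assumptions for illustration, not Pig’s actual implementation or storage format.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInputStream;
import java.io.DataOutputStream;
import java.io.IOException;

/**
 * Hypothetical sketch: a new scalar type must be handled separately at each
 * stage it passes through. None of these names are Pig's real classes.
 */
public final class BooleanTypeSketch {

    /** Loading: parse a text field; anything unrecognized becomes null. */
    static Boolean parse(String field) {
        if ("true".equalsIgnoreCase(field)) {
            return Boolean.TRUE;
        }
        if ("false".equalsIgnoreCase(field)) {
            return Boolean.FALSE;
        }
        return null;
    }

    /** Storing: write a presence marker, then the value if there is one. */
    static byte[] serialize(Boolean value) throws IOException {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (DataOutputStream out = new DataOutputStream(bytes)) {
            out.writeBoolean(value != null);
            if (value != null) {
                out.writeBoolean(value);
            }
        }
        return bytes.toByteArray();
    }

    /** Reading back what serialize() wrote. */
    static Boolean deserialize(byte[] data) throws IOException {
        try (DataInputStream in = new DataInputStream(new ByteArrayInputStream(data))) {
            if (!in.readBoolean()) {
                return null;
            }
            return in.readBoolean();
        }
    }

    /** Comparing: equality has to know about the type too; null matches nothing. */
    static boolean equalTo(Boolean a, Boolean b) {
        return a != null && a.equals(b);
    }

    public static void main(String[] args) throws IOException {
        Boolean loaded = parse("TRUE");
        Boolean roundTripped = deserialize(serialize(loaded));
        System.out.println(equalTo(loaded, roundTripped)); // prints true
    }
}
```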

During that weekend of long, frustrating hours of Pig hacking, a familiar pattern emerged. Each new kind of error meant I was interacting with a different part of Pig. The hops from package to package while writing the patch mirrored the stages of the stepwise data-flow checks I had run in Grunt while writing Pig scripts most days over the past year.

From the perspective of a user in the Grunt shell, the system seems like a cohesive entity: a single program, a complete (and somewhat irrational) Pig. It doesn’t seem that way to me anymore. Now that I’ve read the code, using Grunt is different. By tracing exceptions and seeing the package names of classes I had failed to implement, because I didn’t know they existed or were required, I have learned how it all fits together at a high level. Pig is actually segmented into many logical parts, independent arms that verify and process Pig Latin code in different ways. Grunt presents an illusion of wholeness, and a deeper understanding of Pig makes that illusion transparent.

The Data Revolution

For me, understanding my work over the last year through understanding Pig was profound. It gave that work more meaning, because, strangely enough, Pig has become a big part of my life. I’ve never contributed much to open source before, and I’m glad to be transitioning from a passive consumer of other people’s work to an active participant in an open source project. It is good to create openly, to give back. Open source is technical righteousness.

But more than that, this is an important time in computer science, and unlike many previous technical revolutions, this one is happening completely in the open. Like the integrated circuit before it, MapReduce is producing a paradigm shift that opens broad opportunities to build new kinds of products from our massive collective backlog of data, helping people in new and unprecedented ways. At LinkedIn we’ve amassed the world’s premier dataset on the labor of professionals, and it is the mission of LinkedIn Analytics to leverage that deeply meaningful data to provide insight and value to our users. At LinkedIn Analytics, data processing is both personal and meaningful, as the features we create enhance the working lives of tens of millions of people.

The integrated circuit solved the Tyranny of Numbers and unleashed Moore’s law, enabling a computerized, networked society. It did so with the considerable overhead of patent licensing and litigation. MapReduce is solving the Tyranny of Threads, enabling any company to process data at scale in parallel and extract real value from our most abundant and underutilized resource: information. It is doing so in the open, through free and open source software: the Apache Software Foundation, Hadoop, and its subprojects. We’ve become more efficient organizationally this time around.

If you love open source and you love big, meaningful data - we need you. Come join us. LinkedIn Analytics is hiring!

(Shout-outs to Pete Skomoroch for acting as late-night editor, helping me dramatically improve this post!)